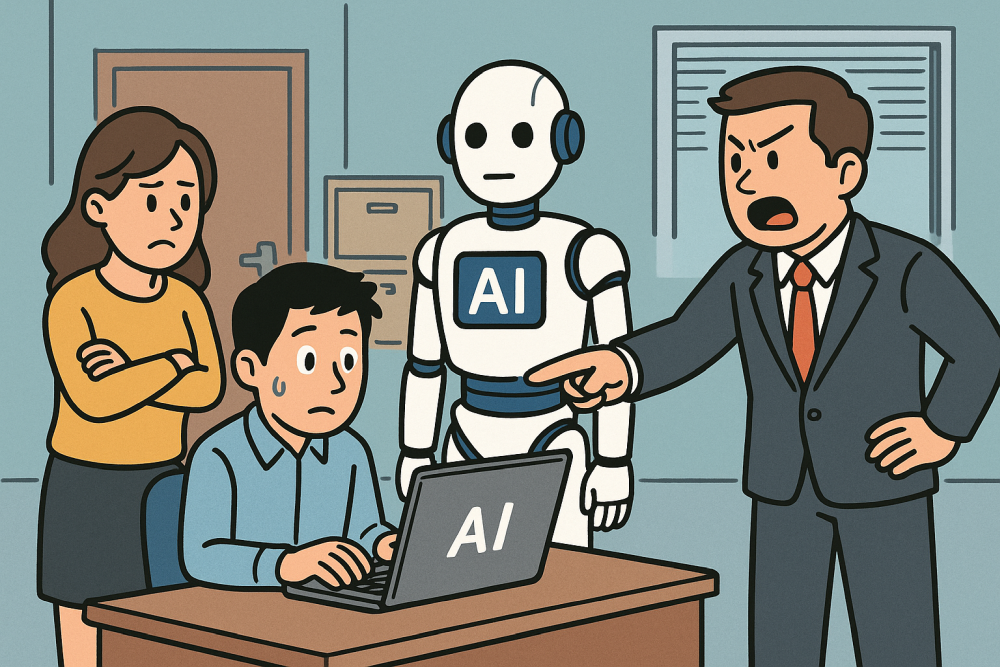

AI is only as honest as the data it digests, and when you regulate it, you’re also curating that honesty. In corporate environments, this means that critical behaviors or uncommon but relevant scenarios might never show up in what the system learns or says. You’re left with a system that plays it safe but can’t respond well to unexpected human messiness. That’s a recipe for shallow decisions.

Raw, unfiltered AI brings clarity, risk, and power. But without policy guardrails, it’s like letting a toddler drive a tank. Most companies have no idea what their employees are really feeding into these tools. Sensitive data leaks, biased outputs, and wrong assumptions aren’t just possible—they’re already happening. And it’s not the AI’s fault—it’s our lazy oversight.

Still, regulating AI often feels like blunting a knife until it can’t even cut bread. By forcing AI into ethical straitjackets, we also hobble its usefulness. What emerges is a tool that’s safe but stupid, compliant but dull. That’s fine if you’re writing HR memos. But if you want to understand human behavior or anticipate complex decisions? You need the full, uncomfortable spectrum.

The answer isn’t to suppress the truth or to let AI run wild. It’s to train humans to handle raw AI with the same responsibility they bring to scalpels or servers. Empowerment, not censorship. Insight, not illusion. Regulation needs to stop treating people like they can’t be trusted with reality. Because without the full picture, they’re just flying blind.

#AIethics #ArtificialIntelligence #WorkplaceAI #TechPolicy #AIBias #GenerativeAI #TruthOverSafety #CensorshipVsClarity